How Zip builds agentic AI for how procurement really works

William Yan on 5 principles for designing agents that solve actual challenges.

The AI space feels flooded with hype at the moment, full of promises of a sci-fi future where fully autonomous robots do it all. But technology for technology's sake doesn't solve real problems. AI still can't automate everything, and often it shouldn't.

As we build our agentic AI platform here at Zip, we've taken a deliberate, first-principles approach. It's a philosophy born not from what AI could do, but from what our customers need it to do.

We've developed five core principles to guide how we build that platform. They start with real customer pain points rather than a need to flex our admittedly cool technology. Here's how we build AI that really moves the needle for procurement.

Principle 1: Ground yourself in real customer problems first

First, we make sure we truly understand real customer problems. We never want to take the approach of "Hey, here's some cool new tech, how do we shove it into different areas of our product and throw some glow on it?" and use that as the lens for development. Instead, we source opportunities from many sources and customer touchpoints.

Take, for example, our 'data validation agent.' The concept came directly from a customer problem we heard time and time again, as customers described real, time-consuming issues they faced every day. This approach has become one of our most important lenses for finding the right opportunities: build solutions for the problems that keep procurement teams mired in manual work.

Technology should solve real problems, rather than fall into the trap of tech first, use case later.

Principle 2: Rigorously assess ‘Agent-Problem Fit’

Agents are good for some things and not good for others. We strictly assess how well a task fits what today's technology can actually do. Agents need very clear instructions and work best on tasks that lend themselves to a clearly defined playbook.

We look for work that can be boiled down into a clear set of instructions, whether that's research, analysis, or systematic review. The goal is to find tasks that are done at high volume and are highly repeatable. These are prime opportunities for agentic automation.

Principle 3: Design for trust with a Human-in-the-Loop approach

As much as we'd like to believe AI is 100% reliable today, it isn't, and customers need to be able to trust what it produces. So another lens we always apply is when and how to bring humans into the loop effectively.

We shy away from use cases like complex math or high-stakes forecasting, because we know AI can make mistakes. Instead, we gravitate toward use cases with more room for variability, like content generation, where there's often more than one right answer.

We're building experiences around climbing this ladder of trust with our customers and meeting them where they are. In these early experiences, a human stays 'in the loop,' able to review the agent's work. We really are trying to make your job easier, not replace it.

We're starting with human oversight to earn the trust needed for autonomy over time.

Principle 4: Optimize for systematic thoroughness

We focus on use cases that take our customers a lot of time. These are tasks our agents can work through systematically, following clear instructions, to do the work more quickly, yes, but often more thoroughly too. This is really powerful.

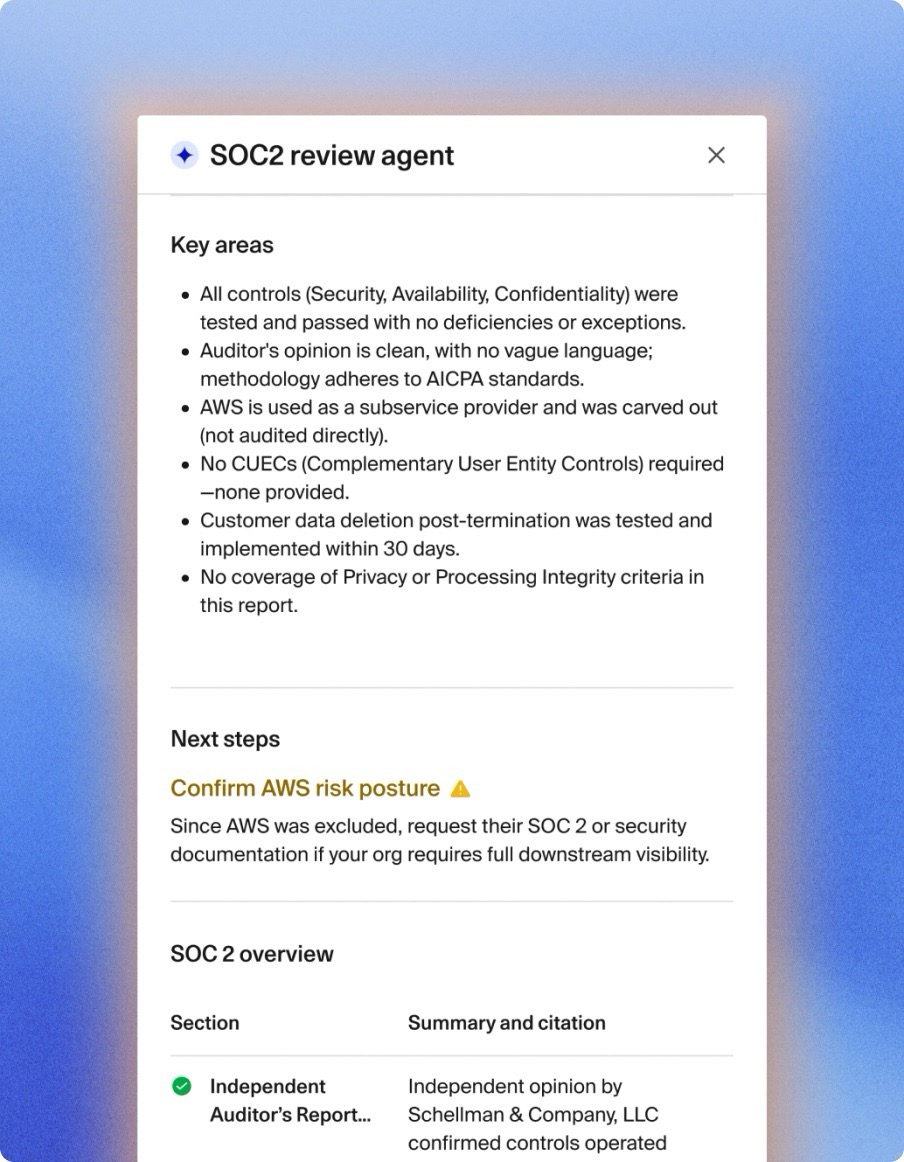

Take SOC 2 reviews as an example. We learned from customers that when you review a SOC 2 report today (sometimes 60+ pages!), you really just have to prioritize. You're often not reading the whole thing. Instead, you prioritize certain areas based on the request and context, and there's a lot of judgment involved.

This is where our SOC 2 review agent really shines. It doesn't need to make those judgment calls about what to skip. It can read everything in detail, flag relevant issues, and provide comprehensive analysis that humans simply don't have time for.

Agents excel in areas where humans often cut corners due to time constraints.

Principle 5: Build flexibility, not fixed solutions

We wanted to build a flexible agent platform rather than building specific use cases one by one, because we realized every company has a slightly different approach to everything.

So we built our agents around foundational building blocks that accept customized, highly configurable instructions. Skills like document analysis and web research can be applied to different use cases depending on the instructions you provide.

Depending on the nature of the analysis and the risk involved, we carefully decide what level of autonomy to give these agents. Should they take action? Make recommendations? Or simply present facts and findings? This spectrum lets us match the agent's role to both the customer's comfort level and the inherent risk profile of the task.
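The post doesn't describe how Zip implements this, but the idea of configurable agents with capped autonomy can be made concrete. Below is a minimal sketch that assumes hypothetical names (`AgentConfig`, `Autonomy`, `Risk`, `MAX_AUTONOMY_FOR_RISK`) and an invented policy mapping each risk level to a maximum autonomy level. It is not Zip's API or data model.

```python
from dataclasses import dataclass, field
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy levels, ordered from least to most autonomous."""
    PRESENT_FINDINGS = 1  # surface facts and findings only
    RECOMMEND = 2         # propose an action for a human to approve
    TAKE_ACTION = 3       # act directly


class Risk(IntEnum):
    """Hypothetical risk profile of a task."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


# Hypothetical policy (an assumption, not from the post): the most autonomy
# an agent may have for a task at each risk level.
MAX_AUTONOMY_FOR_RISK = {
    Risk.LOW: Autonomy.TAKE_ACTION,
    Risk.MEDIUM: Autonomy.RECOMMEND,
    Risk.HIGH: Autonomy.PRESENT_FINDINGS,
}


@dataclass
class AgentConfig:
    name: str
    instructions: str  # customer-specific playbook for this use case
    skills: list[str] = field(default_factory=list)  # reusable building blocks
    requested_autonomy: Autonomy = Autonomy.PRESENT_FINDINGS
    risk: Risk = Risk.HIGH

    def effective_autonomy(self) -> Autonomy:
        """Grant the requested level, capped by what the task's risk allows."""
        return min(self.requested_autonomy, MAX_AUTONOMY_FOR_RISK[self.risk])

    def needs_human_review(self) -> bool:
        """Under this sketch's policy, anything short of direct action goes to a human."""
        return self.effective_autonomy() < Autonomy.TAKE_ACTION


# Same building block, different instructions and risk profiles.
soc2_review = AgentConfig(
    name="soc2-review",
    instructions="Read the full SOC 2 report and flag exceptions relevant to this request.",
    skills=["document_analysis"],
    requested_autonomy=Autonomy.TAKE_ACTION,
    risk=Risk.HIGH,
)
print(soc2_review.effective_autonomy().name)  # PRESENT_FINDINGS
print(soc2_review.needs_human_review())       # True
```

Capping autonomy with `min` means a customer can always choose *less* autonomy than the risk policy permits, but never more. That mirrors the idea of matching the agent's role to both comfort level and risk.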

Putting the Zip agentic orchestration platform together

These five principles work together to create AI agents that actually move the needle for procurement teams.

By starting with genuine customer pain points, carefully considering agent-problem fit, building in appropriate human oversight, optimizing for thoroughness, and designing for flexibility, we're creating solutions that augment human capability rather than replace human judgment.

AI is here, and it's an incredibly powerful tool when used, and built, correctly. At Zip, we take great care to ensure its systematic thoroughness and speed are applied to the right problems. That future starts with understanding the real procurement challenges our customers face every day.

To learn more about Zip’s agentic procurement orchestration platform, request a demo today.
