We’ll walk through some of the key challenges we encountered in importing AWS resources into Python-based CDKTF, and how we tackled these issues to build a scalable and consistent Infrastructure as Code (IaC) solution.

It’s important to note that CDKTF works much like Terraform, except that resources are defined in Python instead of HCL. With our TACOs (Terraform Automation and Collaboration Software), it still follows a plan -> apply workflow for making changes, so we use the terms CDKTF and Terraform interchangeably throughout this post.

Resources that aren’t included in the state file are "untracked" by CDKTF, which can cause issues. For example, if you define an IAM policy named new-iam-policy in CDKTF but that policy already exists in AWS, CDKTF will error when it tries to apply the change:

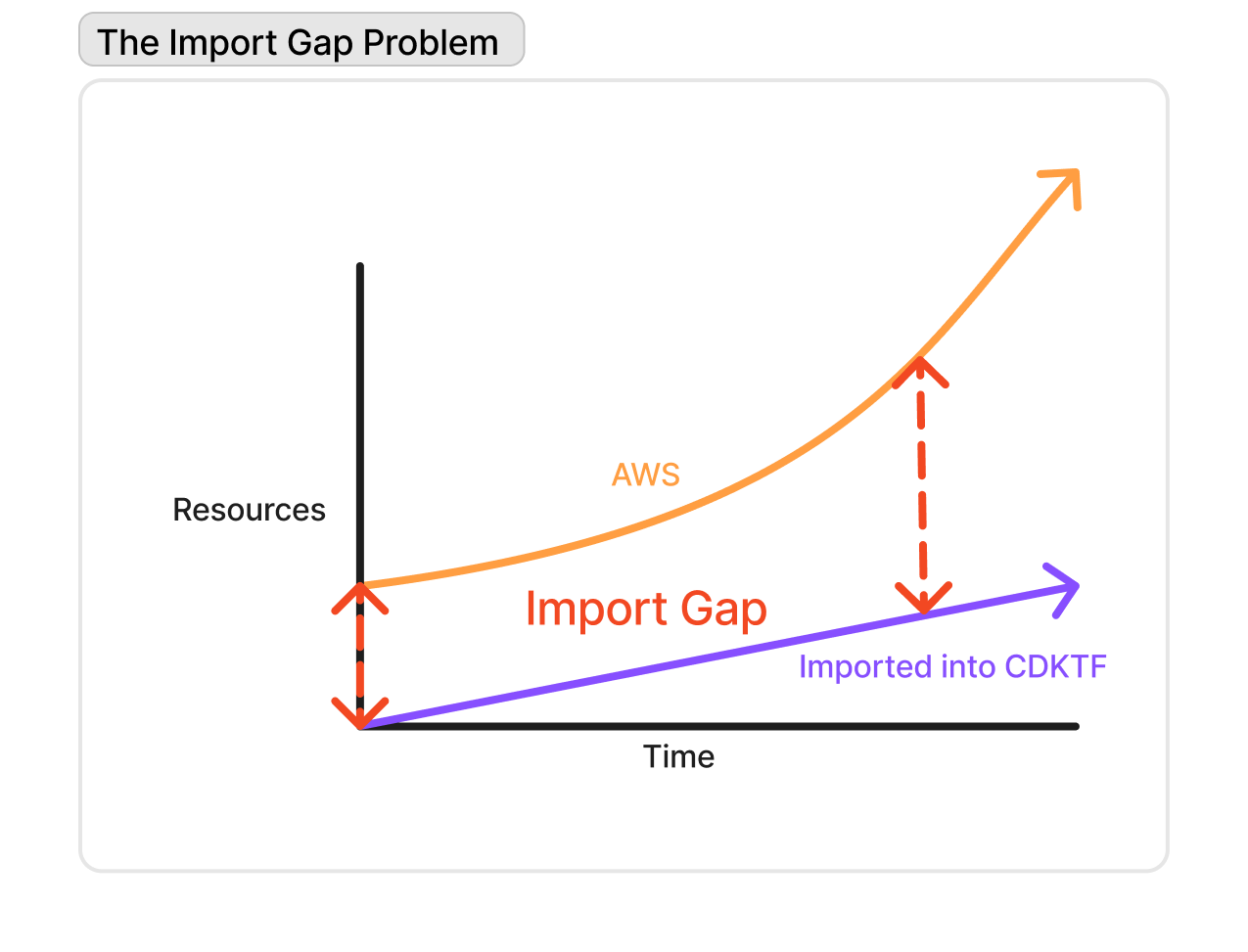
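One way to measure this "untracked" gap, sketched here under our own assumptions rather than as a tool from our migration, is to compare the resource IDs that AWS reports with the IDs recorded in state. The sketch reads the JSON produced by `terraform show -json`, whose `values.root_module` tree lists each resource's `mode`, `type`, and `values.id` (nested modules appear under `child_modules`). The function names are hypothetical, and `aws_ids` would come from the AWS API, for example via Boto3.

```python
import json


def load_state(path):
    """Load the JSON written by `terraform show -json > state.json`."""
    with open(path) as f:
        return json.load(f)


def state_ids(state, resource_type):
    """Collect IDs of managed resources of `resource_type`, including child modules."""
    ids = set()
    modules = [state.get("values", {}).get("root_module", {})]
    while modules:
        module = modules.pop()
        for resource in module.get("resources", []):
            # Skip data sources (mode "data"); only managed resources are tracked
            if resource.get("type") == resource_type and resource.get("mode") == "managed":
                ids.add(resource["values"]["id"])
        modules.extend(module.get("child_modules", []))
    return ids


def find_untracked(aws_ids, state, resource_type):
    """Return AWS resource IDs that exist in the account but not in Terraform state."""
    return sorted(set(aws_ids) - state_ids(state, resource_type))


# Usage with an inline, trimmed-down state document
example_state = {
    "values": {
        "root_module": {
            "resources": [
                {"mode": "managed", "type": "aws_security_group", "values": {"id": "sg-111"}},
            ],
            "child_modules": [
                {"resources": [
                    {"mode": "managed", "type": "aws_security_group", "values": {"id": "sg-222"}},
                ]},
            ],
        }
    }
}
print(find_untracked(["sg-111", "sg-222", "sg-333"], example_state, "aws_security_group"))
# ['sg-333']
```

Any ID this returns is a resource that an apply would try to create from scratch, which is exactly the failure shown above.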

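Our primary goal was to import our resources and generate Python CDKTF code automatically for our existing AWS infrastructure. With over 250 AWS resources to migrate at the time, manual coding and importing from scratch would have been time-consuming, error-prone, and tedious. Several challenges complicated the import process: keeping the Terraform state and CDKTF code in sync, adopting secure templates without modifying resources mid-import, and a lack of import tools suited to Python CDKTF.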

All of these had to be addressed while our AWS infrastructure kept growing and changing every day; the goal was to close the import gap before it grew too large.

One of the major challenges was keeping the state file and CDKTF code synchronized. Any misalignment could lead to configuration drift, where the CDKTF code no longer accurately represents the live AWS infrastructure. Importing resources one at a time and updating the state file and codebase after each import is the safest approach, but it requires manual work and doesn't scale. Importing too many resources at once, on the other hand, invites errors ranging from Python syntax mistakes and CDKTF compilation issues to failures when applying changes. Any one of these could halt progress entirely and would have to be resolved before importing more resources to avoid configuration drift. All of this also had to happen without interfering with developers, who continued to create and update AWS resources throughout.
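A minimal sketch of that trade-off, under our own assumptions rather than a description of our exact tooling: import in small batches, and after each batch require a clean plan before continuing. It relies on the documented exit codes of `terraform plan -detailed-exitcode` (0 = no changes, 1 = error, 2 = changes present). The names `import_in_batches`, `import_batch`, and `run_plan` are hypothetical; both callables are injected so the logic can be exercised without AWS.

```python
from collections.abc import Callable, Sequence

# Documented exit codes of `terraform plan -detailed-exitcode`
PLAN_NO_CHANGES, PLAN_ERROR, PLAN_HAS_CHANGES = 0, 1, 2


def import_in_batches(
    resource_ids: Sequence[str],
    batch_size: int,
    import_batch: Callable[[Sequence[str]], None],
    run_plan: Callable[[], int],
) -> tuple[list[str], list[str]]:
    """Import batch by batch, stopping at the first batch whose plan is not clean.

    Returns (imported_ids, failing_batch). An empty failing_batch means every
    batch imported cleanly; otherwise fix that batch before importing more.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    imported: list[str] = []
    for start in range(0, len(resource_ids), batch_size):
        batch = list(resource_ids[start:start + batch_size])
        import_batch(batch)
        # State and code are in sync only if the plan proposes no changes
        if run_plan() != PLAN_NO_CHANGES:
            return imported, batch
        imported.extend(batch)
    return imported, []


# Usage with fake callables: pretend the plan shows drift once "sg-4" is imported
imported_so_far: list[str] = []
result = import_in_batches(
    ["sg-1", "sg-2", "sg-3", "sg-4", "sg-5"],
    batch_size=2,
    import_batch=imported_so_far.extend,
    run_plan=lambda: PLAN_HAS_CHANGES if "sg-4" in imported_so_far else PLAN_NO_CHANGES,
)
print(result)  # (['sg-1', 'sg-2'], ['sg-3', 'sg-4'])
```

The batch size is the knob: 1 reproduces the safe but slow one-at-a-time approach, while larger values trade safety for throughput.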

Our team wanted the imported resources to use secure templates: templates that follow Zip’s best practices for security and Python code quality. Adjusting templates during the import process would have been risky, potentially causing configuration drift or CDKTF errors, especially if the templates required changes to existing resources. For example, enforcing a default bucket policy on all S3 buckets would complicate matters if any pre-existing buckets lacked a bucket policy: we would need to not only import those buckets but also modify them mid-import, a situation we wanted to avoid.
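To gauge how many existing resources a stricter template would force you to modify, a quick inventory helps. The sketch below assumes a Boto3 S3 client: `list_buckets` and `get_bucket_policy` are real S3 client methods, and `get_bucket_policy` raises a `ClientError` with error code `NoSuchBucketPolicy` when a bucket has no policy. The helper name is hypothetical, and the client is passed in so the logic can be tried with a stub (shown here) before pointing it at `boto3.client("s3")`.

```python
def split_buckets_by_policy(s3_client):
    """Return (buckets_with_policy, buckets_without_policy) for all listed buckets."""
    with_policy, without_policy = [], []
    for bucket in s3_client.list_buckets()["Buckets"]:
        name = bucket["Name"]
        try:
            s3_client.get_bucket_policy(Bucket=name)
            with_policy.append(name)
        except Exception as exc:  # botocore.exceptions.ClientError in practice
            # Only "no policy" counts as missing; re-raise anything else (e.g. AccessDenied)
            code = getattr(exc, "response", {}).get("Error", {}).get("Code")
            if code != "NoSuchBucketPolicy":
                raise
            without_policy.append(name)
    return with_policy, without_policy


class _StubError(Exception):
    """Mimics the `response` attribute that botocore's ClientError carries."""

    def __init__(self, code):
        super().__init__(code)
        self.response = {"Error": {"Code": code}}


class StubS3:
    """Stand-in for boto3.client("s3"), for illustration only."""

    def list_buckets(self):
        return {"Buckets": [{"Name": "logs"}, {"Name": "assets"}]}

    def get_bucket_policy(self, Bucket):
        if Bucket == "logs":
            return {"Policy": "{}"}
        raise _StubError("NoSuchBucketPolicy")


print(split_buckets_by_policy(StubS3()))  # (['logs'], ['assets'])
```

Every bucket in the second list is one that a template enforcing a default bucket policy would change on its first apply.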

Most existing tools for importing AWS resources into Terraform were inadequate for our needs, especially when importing into Python CDKTF. We evaluated the following import tools, but no tool provided the automation we needed without requiring significant manual tweaks, particularly when importing resources into our secure templates:

| Tool | Pros | Cons |
| --- | --- | --- |
| Terraformer | | • Generates a separate state file that must be merged with the current state file (risky!)<br>• Outdated and unmaintained (while there was a release on 3/26/25, the last release before that was 7/3/23, which was the version we used during the import process), which led to incompatibilities with the syntax of resources in Terraform<br>• Only generates HCL code |
| CDKTF import | | • Requires defining every resource individually in CDKTF before importing them one at a time<br>• Must be run locally, which is messy with remote state<br>• Can still generate deprecated CDKTF code |
| Terraform Import CLI | Automatically handles Terraform state | |
| Terraform Import Code Blocks | Automatically handles Terraform state | |

Weighing these pros and cons, it was clear that no single tool would be sufficient for our import needs on its own: whichever one we committed to, we'd still need additional work to configure and update the generated code afterwards.
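For comparison with the CLI approach, Terraform 1.5+ supports declarative import blocks (an `import` block with `to` and `id` arguments), and `terraform plan -generate-config-out=FILE` can draft HCL for imported resources that don't have configuration yet. A minimal sketch of emitting import blocks from a list of discovered resources follows; the function name and the `(resource_type, resource_name, cloud_id)` input shape are hypothetical, and IDs are assumed to contain no double quotes.

```python
def render_import_blocks(resources):
    """Render Terraform `import` blocks for (resource_type, resource_name, cloud_id) tuples."""
    blocks = []
    for resource_type, resource_name, cloud_id in resources:
        blocks.append(
            "import {\n"
            f"  to = {resource_type}.{resource_name}\n"
            f'  id = "{cloud_id}"\n'
            "}\n"
        )
    # Separate consecutive blocks with a blank line
    return "\n".join(blocks)


print(render_import_blocks([("aws_security_group", "Opensearch_production", "sg-redacted")]))
```

Because the imports live in configuration, they go through the normal plan -> apply review instead of mutating state from a developer's machine, though the generated HCL still has to be converted and cleaned up for Python CDKTF.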

Our Solution

We broke the import process into three phases. This let us keep our IaC codebase running while importing resources incrementally, and let us divide the phases of work among different teammates:

1. Import AWS Resources into Baseline CDKTF Code

   We first pulled our AWS resources into baseline CDKTF code and imported them into the Terraform state.

   - This allowed us to immediately benefit from Infrastructure as Code (IaC) while keeping our Terraform state and codebase consistent after the import.
   - It also let us improve the CDKTF code quality incrementally, so we could develop secure templates and migrate to them at a later stage.

2. Writing Secure CDKTF Templates

   We created secure CDKTF templates that aligned with our internal security standards and could be used for all instances of a specific resource type (e.g. IAM resources would be handled in one template, S3 buckets in another).

3. Migrating Baseline CDKTF Code to Secure CDKTF Templates

   Finally, we transitioned our generated CDKTF code to use the secure templates.

Breakdown of our Solution

Let’s dive deeper into each step:

1. Importing AWS Resources into Baseline CDKTF Code

We developed a custom script to automate the import process. It:

- Queries AWS through the Boto3 SDK to retrieve all resources of a specific type in a given region.

- Outputs a list of resource names and base Terraform (HCL) code.

- Uses the terraform import CLI command to bring the outputted resources into our Terraform state.

- Uses cdktf convert to generate baseline CDKTF code for the imported resources (this command converts HCL code into CDKTF code).

This ensured that the Terraform state was updated correctly, and it produced mostly working CDKTF code that we could quickly tweak until it compiled and ran properly. We generated HCL first, rather than CDKTF directly, because HCL is easier to construct from AWS resource data than CDKTF code is; letting cdktf convert handle the translation minimizes errors on the way from AWS resource to CDKTF code.
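To illustrate why HCL is the easier intermediate format, here is a hedged sketch of the last two steps: rendering a flat attribute dict as an HCL resource block, then piping that HCL to `cdktf convert --language python` (cdktf convert reads HCL on stdin and writes the converted code to stdout). The function names are hypothetical, the renderer handles only strings, numbers, booleans, and lists, and the subprocess runner is injectable so the logic can be tested without the CDKTF CLI installed.

```python
import json
import subprocess


def hcl_value(value):
    """Render a Python value as an HCL literal (strings, numbers, booleans, lists only)."""
    if isinstance(value, bool):  # check bool before int: bool is a subclass of int
        return "true" if value else "false"
    if isinstance(value, (int, float)):
        return str(value)
    if isinstance(value, str):
        # Double-quoted with quotes/backslashes escaped; a literal "${" would
        # still need escaping as "$${" in HCL, which this sketch ignores
        return json.dumps(value)
    if isinstance(value, list):
        return "[" + ", ".join(hcl_value(v) for v in value) + "]"
    raise TypeError(f"Unsupported value for this sketch: {value!r}")


def render_resource(resource_type, name, attributes):
    """Render a single HCL resource block from a flat attribute dict."""
    lines = [f'resource "{resource_type}" "{name}" {{']
    lines += [f"  {key} = {hcl_value(val)}" for key, val in attributes.items()]
    lines.append("}")
    return "\n".join(lines) + "\n"


def convert_to_python(hcl, run=subprocess.run):
    """Pipe HCL through `cdktf convert --language python` and return the generated code."""
    result = run(
        ["cdktf", "convert", "--language", "python"],
        input=hcl,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


hcl = render_resource(
    "aws_security_group",
    "Opensearch_production",
    {"name": "Opensearch production", "description": "Allows access to production opensearch"},
)
print(hcl)
# print(convert_to_python(hcl))  # requires the cdktf CLI on PATH
```

Rendering HCL is plain string formatting over key/value pairs, whereas emitting CDKTF Python directly would mean picking the right provider classes and nested config types for every resource, which is the work cdktf convert already does.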

Although building the script itself required some time, it enabled us to handle the import process at scale for all AWS resources in one go. We anticipate that AI tools will improve this area, potentially enabling the automatic generation of pre-constructed baseline code or boilerplate infrastructure.

We chose to generate baseline Python CDKTF code rather than immediately map resources to our secure templates, because the templates were still in development. Our priority was to codify all AWS resources and ensure they were accurately reflected in our Terraform state to avoid infrastructure drift. This approach gave us the flexibility to update the templates before migrating to them.

Below is a simplified example of how we used the AWS API to fetch all EC2 security groups, write a placeholder HCL resource block for each, and print the matching terraform import commands. The HCL is then passed into cdktf convert to get the baseline CDKTF code.

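```python
import re

import boto3

# Uses the default AWS profile and region; pass profile_name= / region_name=
# to boto3.Session to target a different account or region.
session = boto3.Session()
ec2_client = session.client("ec2")


def get_all_security_groups():
    """Fetch all security groups, following pagination."""
    paginator = ec2_client.get_paginator("describe_security_groups")
    security_groups = []
    for page in paginator.paginate():
        security_groups.extend(page["SecurityGroups"])
    return security_groups


def to_resource_name(group_name):
    """Turn a security group name into a valid Terraform resource name.

    Shared by the .tf files and the import commands so their addresses match.
    Group names are only unique per VPC (every VPC has a "default" group), so
    accounts with several VPCs may need a suffix such as the group ID.
    """
    name = re.sub(r"[^A-Za-z0-9_]", "_", group_name)
    # Terraform identifiers must start with a letter or an underscore
    return name if re.match(r"[A-Za-z_]", name) else f"_{name}"


def write_tf_file(sg_id, sg_name):
    """Generate a placeholder Terraform file for importing the security group."""
    tf_filename = f"sg_{sg_id}.tf"
    tf_content = f"""
resource "aws_security_group" "{sg_name}" {{
  # Placeholder for security group {sg_name}
  # To import this security group, run:
  # terraform import aws_security_group.{sg_name} {sg_id}
}}
"""
    with open(tf_filename, "w") as tf_file:
        tf_file.write(tf_content)
    print(f"Terraform file generated: {tf_filename}")


def print_security_group_details(sg_id, all_security_groups):
    """Print details of a security group by its ID and create its Terraform file."""
    for sg in all_security_groups:
        if sg["GroupId"] == sg_id:
            sg_name = to_resource_name(sg["GroupName"])
            print(f"{sg['GroupName']},{sg['GroupId']},{sg['Description']}")
            write_tf_file(sg_id, sg_name)


def print_terraform_import_commands(security_groups):
    """Print a terraform import command for each security group."""
    for sg in security_groups:
        # Same naming as write_tf_file, so the import address matches the resource block
        resource_name = to_resource_name(sg["GroupName"])
        print(f"terraform import aws_security_group.{resource_name} {sg['GroupId']}")


if __name__ == "__main__":
    groups = get_all_security_groups()
    for group in groups:
        print_security_group_details(group["GroupId"], groups)
    print_terraform_import_commands(groups)
```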

2. Writing Secure CDKTF Templates

As we codified the infrastructure, we simultaneously developed secure templates that wrapped the default CDKTF modules. We wanted to enforce secure defaults, like network and encryption configurations for RDS instances and S3 buckets. Additionally, by creating our own templates, we could fully leverage the strengths of Python, such as inheritance, robust libraries, and cleaner class structures.

We covered this topic extensively in our previous blog post. We developed and finalized secure templates for S3, Security Groups, RDS, Secrets Manager, and IAM. This was done in parallel with the import process described in step 1 so that we were ready for step 3.
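The templates lean on a simple idea: secure settings are fixed by the template, and callers may override only what the template allows. The library-free sketch below shows that pattern with plain class inheritance; the class names, setting names, and values are hypothetical illustrations, not our actual S3 or RDS templates.

```python
class SecureDefaults:
    """Merge caller arguments over secure defaults, refusing to weaken locked settings."""

    # Settings every instance gets unless the caller overrides them
    DEFAULTS: dict = {}
    # Keys in DEFAULTS that callers may not override at all
    LOCKED: frozenset = frozenset()

    @classmethod
    def build(cls, **overrides):
        """Return the final settings, raising if a locked setting is overridden."""
        blocked = cls.LOCKED.intersection(overrides)
        if blocked:
            raise ValueError(f"Cannot override locked settings: {sorted(blocked)}")
        return {**cls.DEFAULTS, **overrides}


class BucketSettings(SecureDefaults):
    # Hypothetical values for illustration only
    DEFAULTS = {
        "block_public_access": True,
        "sse_algorithm": "aws:kms",
        "versioning": True,
    }
    LOCKED = frozenset({"block_public_access", "sse_algorithm"})


print(BucketSettings.build(versioning=False))
# {'block_public_access': True, 'sse_algorithm': 'aws:kms', 'versioning': False}
```

Each resource-specific template only declares its own defaults and locks; the enforcement logic lives once in the base class.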

Below is a sample of our secure CDKTF template for AWS Security Groups:

```python
# templates/security_group.py
from cdktf_cdktf_provider_aws.security_group import (
    SecurityGroup as CdkSecurityGroup,
    SecurityGroupEgress,
    SecurityGroupIngress,
)


class SecurityGroup(CdkSecurityGroup):
    """AWS Security Group.

    Constructs a new Security Group, along with its rules.

    :param stack: CDKTF stack.
    :param group_name: Name of the security group.
    :param description: Description of the security group.
    :param tags: Tags to apply to the security group.
    :param vpc_id: ID of the VPC where the security group will be associated.
    :param rules: List of rule configurations (dictionaries) that define ingress and egress rules.
    :param provider: Optional AWS provider, e.g. for another account or region.
    """

    def __init__(
        self,
        stack,
        group_name: str,
        description: str,
        tags: dict,
        vpc_id: str | None = None,
        rules: list | None = None,
        provider=None,
    ):
        if rules is None:
            rules = []
        egress = []
        ingress = []
        # Derive a space-free construct ID from the group name
        terraform_name = group_name.replace(" ", "_")

        for rule in rules:
            # Fields shared by ingress and egress rules; optional lists default to empty
            rule_args = dict(
                cidr_blocks=rule.get("cidr_blocks", []),
                description=rule["description"],
                from_port=rule["from_port"],
                ipv6_cidr_blocks=rule.get("ipv6_cidr_blocks", []),
                protocol=rule["protocol"],
                security_groups=rule.get("security_groups", []),
                to_port=rule["to_port"],
                # The provider exposes Terraform's `self` argument as `self_attribute`
                self_attribute=rule["self"],
            )
            if rule["type"] == "egress":
                egress.append(SecurityGroupEgress(**rule_args))
            elif rule["type"] == "ingress":
                ingress.append(SecurityGroupIngress(**rule_args))
            else:
                # Fail loudly instead of silently dropping a mistyped rule
                raise ValueError(f"Unknown rule type: {rule['type']!r}")

        super().__init__(
            stack,
            id_=terraform_name,
            name=group_name,
            description=description,
            vpc_id=vpc_id,
            revoke_rules_on_delete=False,
            tags=tags,
            egress=egress,
            ingress=ingress,
            provider=provider,
        )
```
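Because the template reads type, description, from_port, to_port, protocol, and self directly from each rule dict, a rule missing one of those keys fails with a bare KeyError when the CDKTF app runs. A small pre-check like the hypothetical validate_rules below (not part of the template) can report every problem at once, and also flags unknown rule types and inverted port ranges.

```python
REQUIRED_RULE_KEYS = {"type", "description", "from_port", "to_port", "protocol", "self"}
VALID_RULE_TYPES = {"ingress", "egress"}


def validate_rules(rules):
    """Return a list of human-readable problems found in security group rule dicts."""
    problems = []
    for index, rule in enumerate(rules):
        missing = REQUIRED_RULE_KEYS.difference(rule)
        if missing:
            problems.append(f"rule {index}: missing keys {sorted(missing)}")
        if rule.get("type") not in VALID_RULE_TYPES:
            problems.append(f"rule {index}: type must be 'ingress' or 'egress', got {rule.get('type')!r}")
        from_port, to_port = rule.get("from_port"), rule.get("to_port")
        # Only compare when both ports are present and numeric
        if isinstance(from_port, int) and isinstance(to_port, int) and from_port > to_port:
            problems.append(f"rule {index}: from_port {from_port} is greater than to_port {to_port}")
    return problems


rules = [
    {"type": "ingress", "from_port": 443, "to_port": 443, "protocol": "tcp", "description": "", "self": False},
    {"type": "egres", "from_port": 0, "to_port": 0, "protocol": "-1", "description": ""},
]
for problem in validate_rules(rules):
    print(problem)
```

Running a check like this in CI before cdktf synth surfaces typos such as "egres" or a forgotten "self" key with a readable message instead of a stack trace.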

3. Migrating Baseline CDKTF Code to Secure CDKTF Templates

With both the baseline CDKTF code and the secure templates in place, our final task was to migrate the existing code to use the templates. This step was straightforward: for each resource, we replaced the baseline Python code with an equivalent instantiation of the matching template. We engaged the entire security team, including non-engineering members (security compliance program managers and IT engineers), to help with the migration, which is a testament to how approachable and efficient the process was. We scheduled the migration over one month, with a strict cutoff at the end of the quarter, to fully switch these resources from being managed through the AWS console to being managed with CDKTF.

Below is a comparison of the baseline CDKTF code and the migrated code that uses our secure template. First, the baseline CDKTF code:

```python
from cdktf_cdktf_provider_aws.security_group import (
    SecurityGroup,
    SecurityGroupEgress,
    SecurityGroupIngress,
)

SecurityGroup(
    stack,
    "Opensearch_production",
    description="Allows access to production opensearch from production kubernetes",
    vpc_id="vpc-production-id",
    ingress=[
        SecurityGroupIngress(
            cidr_blocks=[],
            description="",
            from_port=443,
            ipv6_cidr_blocks=[],
            protocol="tcp",
            security_groups=[
                "sg-redacted",
                "sg-open-internal",
                "sg-another-one",
            ],
            to_port=443,
        )
    ],
    egress=[
        SecurityGroupEgress(
            cidr_blocks=["0.0.0.0/0"],
            description="",
            from_port=0,
            ipv6_cidr_blocks=[],
            protocol="-1",
            security_groups=[],
            to_port=0,
        )
    ],
)
```

This is the migrated code using our secure template:

```python
from templates.security_group import SecurityGroup

# group: Opensearch production
opensearch_production_rules = [
    {
        "type": "ingress",
        "from_port": 443,
        "to_port": 443,
        "protocol": "tcp",
        "cidr_blocks": [],
        "description": "",
        "security_groups": [
            "sg-redacted",
            "sg-open-internal",
            "sg-another-one",
        ],
        "self": False,
    },
    {
        "type": "egress",
        "from_port": 0,
        "to_port": 0,
        "protocol": "-1",
        "cidr_blocks": ["0.0.0.0/0"],
        "description": "",
        "self": False,
    },
]

SecurityGroup(
    stack,
    group_name="Opensearch production",
    description="Allows access to production opensearch from production kubernetes",
    rules=opensearch_production_rules,
    tags=tags,
)
```

As the example shows, we still specify the Security Group's core details (its name and its ingress and egress rules), but other optional arguments are abstracted away (they can still be specified when needed). This makes it easy for other users to read and understand the Security Group and update it as necessary. The template also spares end users from digging through the CDKTF docs to find which classes to instantiate (e.g. SecurityGroupEgress and SecurityGroupIngress); they can describe rules as plain Python dicts instead.
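Much of this mapping is mechanical. As a hedged illustration, and not necessarily how we performed the migration, a helper like the hypothetical to_template_rule below turns the keyword arguments of a baseline SecurityGroupIngress or SecurityGroupEgress call into the dict shape the template expects, renaming self_attribute to "self" and filling in the keys the template indexes directly.

```python
def to_template_rule(rule_type, baseline_kwargs):
    """Convert baseline SecurityGroupIngress/Egress keyword arguments into a template rule dict."""
    if rule_type not in ("ingress", "egress"):
        raise ValueError(f"rule_type must be 'ingress' or 'egress', got {rule_type!r}")
    rule = {"type": rule_type}
    for key, value in baseline_kwargs.items():
        # The provider's `self_attribute` argument is spelled "self" in the template's dicts
        rule["self" if key == "self_attribute" else key] = value
    # Baseline code often omits these; Terraform defaults them to false / empty,
    # but the template reads both keys directly
    rule.setdefault("self", False)
    rule.setdefault("description", "")
    return rule


baseline_ingress = dict(
    cidr_blocks=[],
    description="",
    from_port=443,
    ipv6_cidr_blocks=[],
    protocol="tcp",
    security_groups=["sg-redacted", "sg-open-internal", "sg-another-one"],
    to_port=443,
)
print(to_template_rule("ingress", baseline_ingress))
```

The output carries every field of the baseline call plus "type" and "self", so the migrated resource should plan with no changes as long as the group name, description, and construct ID also match.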

Conclusion

In one quarter, we progressed from zero IaC to a fully operational Python CDKTF codebase. We developed five production-quality modules for IAM, S3, RDS, Secrets Manager, and Security Groups, migrated over 250 critical AWS resources across four AWS accounts to CDKTF, and reduced the number of AWS administrators by 95%. Two factors were key: our custom importer script, which saved countless engineering hours by automating the resource import process, and breaking the work into manageable phases.

A big thanks to the team at Zip for making these achievements possible:

- Ashish Patel for designing the CDKTF import script

- Eric Zhang for supporting the overall IaC migration process, reviewing the secure architecture design and coordinating the migration.

- The rest of Zip’s Security Team (Amir Shahatit, Poorva Joshi, Piru Chheang) for assisting in the CDKTF migration

- Chris Zhen, Kaifeng Yao, and the rest of Zip’s infrastructure team for providing feedback on our plans and being the first users of our IaC.

We are also hiring! If you’re interested in joining our team at Zip, please reach out and apply at https://ziphq.com/careers!